2019-05-04 10:40 — By Erik van Eykelen

Algolia Index Ingestion Based on Amazon S3, SQS, and Apache Tika

Recently I created a proof-of-concept for a client to show that a combination of Amazon S3, Amazon SQS, Apache Tika, a Ruby-based Heroku worker, and Algolia can be used to create a surprisingly powerful search engine index ingestion solution with little effort.

By “search engine index ingestion” I mean the process of adding data from documents (.doc, .pdf) and text-based content to a search engine index.

Here’s the bill of materials for the proof-of-concept:

- Amazon S3 is used as the ingestion entry point for text, images, and documents.

- Amazon SQS is used as a message queue service.

- A Ruby-based worker is used to process each SQS message.

- Apache Tika is used to extract text from e.g. Office and PDF documents.

- Algolia.com is used to store, index, and retrieve indexed content.

A Ruby on Rails-based web endpoint was used to present rudimentary search results.

This basic search services consists of less than 200 lines of Ruby code and two dozen lines of AWS policy configuration.

Features

The proof-of-concept had to demonstrate several things:

- Is it possible to use Algolia in a multi-tenant situation?

- Can multiple, stand-alone applications make use of the same ingestion process?

- Is it easy to add, update, and delete content from the index?

- Is it possible to index the contents of Word and PDF documents?

- Is it possible to index HTML, Markdown, and plain text?

- Is it possible to work with multiple languages?

- How difficult is it to correlate search results with e.g. URLs in our web app or screens in our mobile app?

File-based API

For the proof-of-concept I chose S3 to act as a file-based API:

- It’s easy to upload a file to S3 in any programming language.

- S3 supports multi-part uploads for large files.

The ingestion process behind this file-based API uses the following components:

- Your app uploads a JSON manifest to S3 (see below for examples).

- S3 sends a trigger to SQS for every uploaded

.jsonfile. The trigger causes SQS to add the S3 upload event to its queue. - The SQS queue is periodically polled by the Ruby worker. It processes every event by downloading the JSON manifest from S3, optionally downloading the associated file from S3.

- In case a file was uploaded its content is extracted using Apache Tika.

- The extracted text is submitted to the Algolia API.

- Algolia updates its index based on add, update, or delete API requests (I used algoliasearch-rails for this).

The following example shows how a plain text snippet can be added to the index:

{

"tenant_urn": "urn:example-app:example-company-dc1294fceb07b30102a5",

"source_urn": "urn:example-app:post:body:dd9dd35530e76603c875",

"operation": "create",

"locale": "en",

"content_body": "Hello world, this is my first indexed post."

}

tenant_urncontains a user-defined value which is used by the search engine to compartmentalize search results.source_urncontains a user-defined value which helps us correlate search results with features in our app. In this exampleurn:example-app:post:body:dd9dd35530e76603c875might point tohttps://app.example.com/posts/dd9dd35530e76603c875. I prefer URNs over raw URLs because URLs may change over time, while well-considered URNs have a much longer shelf life.- The

createoperation tells the index to add the value ofcontent_bodyto the index. Two other supported operations areupdateanddelete. - The

localevalue is used to tag the entry in Algolia with the valueen. This enables us to filter queries by language.

Adding the contents of a PDF file is just as easy:

{

"tenant_urn": "urn:example-app:example-company-dc1294fceb07b30102a5",

"source_urn": "urn:example-app:resume:attachment:711dc4b9b481a5749e67",

"operation": "create",

"locale": "en",

"title": "Résumé Joe Doe 2019.pdf",

"content_s3_region": "eu-west-1",

"content_s3_bucket": "search-proof-of-concept-test",

"content_s3_key": "834cb938-d0a3-404b-a15a-bf7a5a238ce6.pdf"

}

Indexing a file is a two-step process:

- First you upload a document (PDF, Office, etc.) to S3 (tip: use a GUID for the file name).

- Then you upload a JSON file to S3 with

content_s3_region,content_s3_bucket, andcontent_s3_keyset to the values of the document you uploaded in step 1. Processing of the document and its associated JSON file is resilient against “out of order” upload completion because the worker simply ignores the manifest if the associated file is not yet present.

Amazon settings

Making the proper settings in Amazon is always challenging (at least for me, it’s not my daily work). I’ve summarized the settings below:

Add an SQS event to S3:

Select the event types you want to receive, select the file extension (optional), select SQS Queue, and select the SQS instance you have prepared:

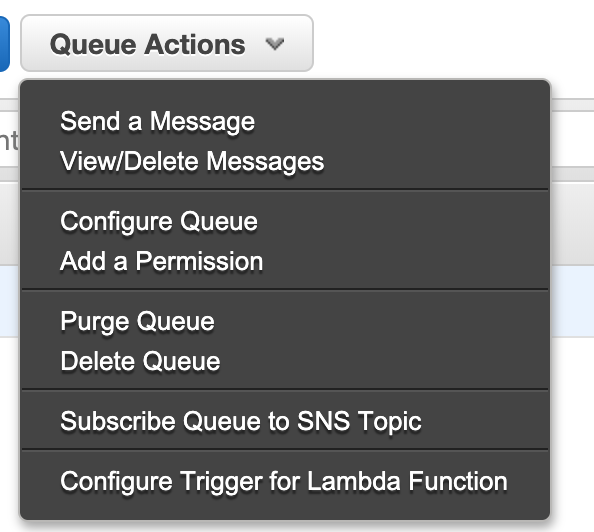

In your SQS instance ensure your S3 user has access to it:

Use “View/Delete Messages” to test if your S3-to-SQS pipeline is working:

Algolia

This proof-of-concept uses Algolia to index our content. There is not much to say about this part because it is ridiculously easy to set it up, have your content indexed, and return search results. And it is blazingly fast to boot.

The concept used just one model called Page. The model code looks like this:

class Page < ApplicationRecord

include AlgoliaSearch

algoliasearch index_name: "pages" do

attribute :tenant_urn

attribute :source_urn

attribute :locale

attribute :tags

attribute :title

attribute :body

end

end

The only other thing you need to do (in a Rails app) is adding your credentials to ENV vars and include the algoliasearch-rails gem. See https://github.com/algolia/algoliasearch-rails for documentation.

Code

Code snippets created for this proof-of-concept are listed below: